Teaching methods: Pedagogical challenges in moving beyond traditionally separate quantitative and qualitative classrooms

Jo Ferrie1 and Thees Spreckelsen1

1University of Glasgow, Glasgow, UK

Abstract

In the social sciences, research methods courses may be the most important and yet the most undervalued. Quality Assurance Agency (QAA) benchmark statements that deliver UK national standards all signify that methods teaching is essential. In terms of applicability, learning how to evaluate and produce knowledge has high relevance for employability. In the UK, despite being ‘core’, methods courses, compared with disciplinary courses, tend to have lower institutional investment, utilising relatively small and early-career teaching teams, and are taught to large groups of students (Ferrie et al., 2022). This paper draws together literature on the pedagogy of methods with reflexive practice to critically consider the barriers to learning methods and what methods educators and learners can do to engage optimally with the learner experience. This paper begins by considering how teaching of numeric- and text-based data produces a different learning environment to disciplinary learning. It then considers how quantitative pedagogy is distinct from qualitative pedagogy and in turn perpetuates the idea that they are distinct forms of knowledge production. The ‘pine tree’ and the ‘oak tree’ are two pedagogic devices that help students transform their learning practices. Growing calls to mix methods in course presentations and transcend the qualitative/quantitative divide present new challenges for pedagogy. The paper will outline a third pedagogic device that has helped students grasp something of a holistic understanding of how research methods operate. The ‘dirty greenhouse’ analogy, used with undergraduates and postgraduates (in a large joint social sciences course at a large UK university), helps students scaffold their learning and get a quick grasp on what the value of methods courses is, and avoids pitching qualitative and quantitative paradigms ‘against’ each other. The paper will argue that students are aided by having visual frameworks that help them, enhancing their critical approach to knowledge production and understanding better what a ‘defence’ in our research writing aims to do.

Keywords

pedagogy of methods, methods, teaching methods, qualitative learning, quantitative learning, social research methods

Introduction

Teaching methods is tough (Earley, 2014). Despite methods training being a core learning outcome of social science Quality Assurance Agency (QAA) benchmarks, in many ways, students are signalled to consider methods learning as an ‘add-on’. For example, core undergraduate methods learning in the UK is often delayed (to year 2 or 3 of the degree). It is widely accepted that students struggle to invest in learning methods, with well-taught courses still getting challenging student feedback (Scott Jones & Goldring, 2014). While methods courses are an essential component of undergraduate social science programmes (Coronel Llamas & Boza, 2011), ‘doing research’ is not typically considered by incoming students as ‘the point’ of their social science degree (Markham, 1991), often leading to avoidance.

Perhaps this explains one piece of evidence from our own practice: consistently for seven years on school-wide undergraduate core methods courses (2012-2020, around 200 students per year), students on the quantitative course earned a grade that was, on average, one third of a grade lower than their average grade across their disciplinary classes (if their average grade was a B plus, they tended to earn a B on quantitative research methods). Interestingly, students did better on the quantitative course than students on the qualitative course (around 150 students took both courses, and the qualitative class size was usually between 200 and 250 students) by, on average, one third of a grade (if the quantitative grade was a B, then the qualitative grade was on average a B minus). In our experience (and reinforced by the tiny literature on pedagogy and qualitative methods) there is lower resistance to learning qualitative methods compared with quantitative. Yet the grade outcomes suggest a problem still exists.

While one or two depressed scores won’t be enough to impact on a student’s degree classification, it is clear that dedicated methods teachers are not able to convert their enthusiasm, skill and passion into grades with the same ease as disciplinary colleagues. This paper will explore potential strategies for engaging students differently and will argue that the integration of qualitative and quantitative learning into a single course may well make the difference.

Literature: Why teaching methods is tough

There is a reluctance around learner engagement with methods and this is rooted in individual attitudes and reinforced by structural barriers. Barriers to creating adequate and accessible methods learning spaces are well rehearsed and include structural issues:

· a reluctance by universities to protect curriculum space for methods learning, on the basis that it may put students off from applying (MacInnes, 2014; McVie et al., 2008; see Scott Jones & Goldring, 2015, for a neat overview);

· large class sizes, often in the hundreds and far larger than optional honours courses (usually capped at between 20 and 40 students), which consequently bring a wide diversity in learning needs (for example, from complete novices to those with quite advanced understanding) (Ferrie et al., 2022);

· semester-long courses require a lot of repetition of key learning points limiting students’ engagement (Ferrie et al., 2022); and

· particular to quantitative courses, the time-gap between studying maths (Hodgen et al., 2010) and beginning a numerate methods course causes problems.

And individual resistance:

· the rising scrutiny of a good student experience across the Higher Education (HE) sector causing courses with mixed reviews to be seen as unattractive to institutions (Scott Jones & Goldring, 2015), and confusing enjoyable learning with high value learning;

· the reputation that builds up in student folklore around methods courses being ones to ‘endure’ (MacInnes, 2014; Wathan et al., 2011) and as mechanistic rather than informing (Buckley et al., 2015);

· students reluctant to engage with ‘difficult’ subjects (D'Andrea, 2006; Humphreys, 2006; Williams et al., 2008); alongside ‘maths anxiety’ (Scott Jones & Goldring, 2015); and

· the expectation from many non-STEM (Science, Technology, Engineering and Maths subject areas) students (with the exception perhaps of some political scientists, psychologists, linguists and economists) that numeracy will not be a required skill (Williams et al., 2004).

How we (HE educators in the social sciences) ‘deal’ with this problem necessitates some understanding that learning methods is different to learning disciplinary content. First of all, it is different because it represents such a small part of the curriculum. Having only 20 credits (equivalent to 1/6 of annual learning time or around 1/24 of degree learning time) in most institutions has resulted in an almost exclusive focus on skills (for example, doing a chi-square or t-test; interview or focus group). In reviewing the literature around methods and pedagogy, this section will consider how meaningful engagement requires learning beyond skills, towards theory and how research skills are utilised within a research project. As well as the skills-only focus, this section will examine the difference between how students learn to engage with their disciplinary/substantive courses and how they must adjust their learning approach when engaging with methods. It is argued that this disruption increases their resistance to learning methods.

Within UK HE, in disciplines where the content is not controlled or learning deemed essential by an external body (for example, the content of veterinary medicine degrees in the UK is controlled by the Royal College of Veterinary Surgeons and classes cannot be missed), there is a tendency for students to attend only some lectures and seminars/tutorials within a course, and this creates a second kind of problem for methods teachers. Indeed, so significant is this issue that maintaining/improving attendance is a field of study in pedagogical literatures (for example, Büchele, 2021).

Disciplinary/substantive educators preach the value of attendance, sometimes making attendance mandatory. Yet, students have learned from experience that the highest return for investment in disciplinary learning, in terms of grades, is to attend only the parts of the course that will be assessed and examined (MacInnes, 2018). Using lecture time to revise ‘just enough’ material to pass the exam results in stronger grades, but leaves learning gaps that could impact on ‘later’ learning objectives as they progress through their degree.

In methods learning, particularly when learning how to work with numeric data, the learning is incremental and students must engage with all the lessons, and in the order presented. A methods lecturer telling the students this at the beginning of the year, however, is preaching the same message that disciplinary teachers advocate. And by year 2 or 3 of their undergraduate degree, students have learned to ignore the advice. The eventual realisation that attendance really is required when learning methods leaves students feeling that they are being asked to work harder in methods classes (MacInnes, 2018). Students are further challenged when they appreciate that they will be examined on new skills, another source of anxiety and resistance. John MacInnes (2018), writing on the pedagogy of quantitative methods, discussed the learning hump: once students have climbed to the top of the hill and something has ‘clicked’, the rest of the learning feels like free-wheeling downhill. The trick, of course, is to get students to invest in the uphill struggle. This is tough when the learning environment is a disruption.

Related to all these barriers is a paradox in the way methods, particularly those that use numeric data, can be taught in absolute ways with objectivity often declared as a ‘gold standard’. If continuous data are being used then often raw data can’t be contested. Categorical data, however, are constructed in largely subjective ways. Strong students struggle with the lack of acknowledgement of this in course materials, often, in our experience, assuming that it is a fault of their own logic that they see the limitations of research designs. This is reinforced by textbooks and peer-reviewed journal articles that minimise the messiness and subjective engagement researchers have with all elements of their projects. As methods teachers, we know research is imperfect. Students asked to invest in difficult learning can feel gaslit by this admission. The critical thinking skills students possess are equally impactful on qualitative methodologies, with learners quick to see that generalisability can’t be achieved, asking: what’s the point? Linked to this are voices from academic colleagues who add their critical voice against methods courses that are too shallow, too partial in their scope (and paradoxically it is these arguments that prevent methods courses from taking up more of the curriculum required for depth to be reached).

Perhaps the problem lies also in the way we teach disciplinary courses? We argue that all courses could evolve the dynamic between student and the new knowledge they acquire. There are many exceptions, but generally, in our view, there is more emphasis on students developing their ‘thinking’ rather than seeing themselves as practitioners. Thinking, particularly critical thinking, is highly valued and valuable; but examining what we ‘do’ with our intellect is also a valid use of disciplinary classroom time. Culturally, educators in UK HE may tend to perform the role of reproducers, rather than producers of knowledge. Disciplinary teachers rarely discuss research practices in lectures (Ferrie et al., 2022), emphasising findings, and are often shy to include their own work in reading lists. As a result, ‘doing research’ becomes hidden, undervalued and students implicitly learn it is of less ‘value’ than learning about research findings. Therefore, when the practice-focused methods classes come along, students are resistant because the learning is disassociated from their disciplinary courses and the learning experience is quite different. One solution is to better expose students to research practice and the process of knowledge production in disciplinary classrooms.

Another solution is for methods teachers to shift their pedagogy from a skill focus to one that builds a learning bridge to disciplinary themes (though this is tough with large interdisciplinary classes and only 20 credits of teaching time). There has been some, but limited, published work on the pedagogy of research methods generally (Hesse-Biber, 2015; Wagner et al., 2011). One exception from Kilburn et al. (2014) argues that three goals should direct teaching: first to make research visible by exposing students to research processes, then to give students practice in doing research, and finally to give students space to critically reflect on what and how they have learned: a skill valued by non-academic employers (Carter, 2021).

Still, there remains a problem for learners in engaging with the very different skills taught in quantitative methods and qualitative methods. Within the academy there are deep-rooted factions, with those preferring numbers arguing for the authority of objectivity and dismissing the explorative approach of those working with words. Too few acknowledge how easily numerical data are manipulated or expose the elitism in methodological approaches that require training, equipment, and technologies. Perhaps the alternative view is that quantitative social scientists de-humanise and abstract pervasive social problems in harmful ways. The separation of quantitative and qualitative courses is ideological and historically rooted. If methods were taught in UK HE before 1970, they were dominated by quantitative skills, prior to the critical turn which coincided with the inclusion of women as faculty members from the 1960s onwards. The steady increase towards dominance (in terms of numbers) of qualitative researchers in teaching positions coincided with the introduction of social science courses in UK HE. By the 2000s, there was growing alarm that the quantitative social science teaching force was in decline and significant investment was required to revive quantitative teaching teams (MacInnes, 2014).

The teaching of quantitative methods in the UK has been given healthy investment in recent years thanks largely to a collaboration between the Nuffield Foundation, the Economic and Social Research Council (ESRC), and the HE Funding Council in England and Wales, which invested £20 million into undergraduate quantitative methods teaching (Rosemberg et al., 2022). Launching a competitive process, 17 Q-Step Centres were formed to deliver a ‘step-change’ in how social scientists use and understand quantitative approaches. At our institution, we have gained from this investment. Our opportunity to craft quantitative learning spaces for students has paid dividends and 72% (average 2018-2020) of all graduates ‘with Quantitative Methods’ (the degree developed through the Q-Step programme) earn a first class honours classification compared to around 25% of graduates in our school and 32% of students on Q-Step degrees at other institutions (Rosemberg et al., 2022). The Q-Step students are explicitly taught critical evaluation skills in their 100+ credits of courses, and these are applied in disciplinary assignments translating into strong performances across their degree. More work could explore the value of advanced methods learning for critical skills. The Q-Step programme resulted in evaluation of new courses producing a wave of literature on teaching quantitative methods. There was no similar investment into qualitative methods in the UK and far less literature that carefully considers pedagogy and the learning environment.

In their paper on teaching mixed methods, Hesse-Biber (2015) usefully calls for teachers to have strong knowledge of both quantitative and qualitative modes of doing research in order to provide students with an adequate theoretical underpinning of knowledge production. As many universities persist with the division of distinct skill-focused quantitative and qualitative courses, it remains an issue that too few methods teachers have knowledge of ‘the other’ in order to adequately make distinct the theoretical underpinnings of the method(s) they teach. We agree with Purdam (2016, p. 258), drawing on Earley (2014), Garner et al. (2009), and Kilburn et al. (2014), that:

[…] teaching social science research methods requires a more coherent pedagogical grounding and robust curriculum design which integrates theory, practice, and hands on practical training. (Purdam, 2016, p. 258)

And we argue here that this gives methods teachers a different job to disciplinary teachers: and quantitative teachers a different job than qualitative teachers as long as methods are separated into these two approaches.

As methods teachers, it may not be surprising to hear that, in our view, learning how to deliver robust and rigorous knowledge is the most important learning that a degree offers (Ryan et al., 2014). If teaching is well done then the student can transform into a researcher confident in producing new knowledge and with it impact, influence, and change. They can build a skill-set and conceptual understanding that can underpin an academic career and these skills are equally valued by industry. But, for the reasons listed above, methods teachers teach in a hostile environment. We champion an engaged and reflexive approach that draws from the Scholarship of Teaching and Learning (SoTL) to improve our teaching and recognise how difficult this is to achieve given problems of large interdisciplinary classes and small teaching teams. What follows is an analogy we’ve used with methods teachers and students to help them understand this difference between discipline-focused and methods-focused teaching, and understand the implications of this for how they approach the learning environment.

The ‘two tree’ pedagogic device

Within many UK universities there has been a trend towards teaching qualitative and quantitative methods in separate modules usually with distinct teaching teams. It is our view that students are limited by the structure of methods teaching towards quantitative and qualitative ‘silos’ (Morgan, 2007) which introduce students to skills and concepts but often fail to produce confident researchers. In an attempt to overcome resistance, and to explain to students how they should approach their methods learning, we use the analogy of the tree. First, we will outline how qualitative methods learning is analogous to an oak tree, and then how quantitative methods learning can be likened to a pine tree. Analogies are a useful device in methods teaching (Aubusson, 2002; Mann & Warr, 2017). They help students imagine the knowledge they don’t yet have, around theory, ontology, epistemology, and skills so that new learning can be scaffolded (really tough to do given they don’t know what’s missing from the scaffold).

Teaching qualitative methods: The oak tree

We describe qualitative methods as an oak tree, with multiple trunks that split from each other close to the ground, and this, we argue represents the multiple ontologies available to qualitative researchers (for example, phenomenology focuses upon experience to construct knowledge, and discourse analysis focuses upon language used). Some branches in our oak tree are as thick and sturdy as the single trunk of the pine tree.

Figure 1. The oak tree is an analogy for learning qualitative methods, with some branches being deceptively easy to ‘climb’ and some being ‘grounded’; it represents how learning one ‘branch’ may not help in learning another. Image author’s own (Ferrie).

To apply this to qualitative methodologies, the learning of one qualitative approach (ethnography for example), may not help in understanding another (critical discourse analysis for example) as the branches split close to the ground, and climbing onto one will not help a researcher climb onto the other. This analogy helps students understand and visualise how their qualitative course has been designed: and how this should impact on how they approach learning. Each lecture probably will focus on a branch and so some lectures will feel disjointed from the lecture before. Thus, qualitative methods educators have to choose between breadth and depth, and some topics that could be easily extended over a semester, are limited to a one-hour lecture. Using a team approach to qualitative teaching may allow expertise to shine, but as their teaching applies to their own ‘branch’, the teaching may unintentionally obfuscate understanding of how different qualitative methods are connected, and how they are not.

The analogy helps students engage in learning qualitative methods and beware the common mistake of thinking they are ‘easy’. Like an oak tree (Figure 1), some branches are close to the ground and are easy to climb on to, and this captures the strong communication and narrative skills students have, and their ability to generate qualitative data with little training. This part of the analogy can also be used to help students learn that what we mean by ‘grounded’ is that our methods have strong ecological validity, aiming to reflect rich, nuanced contextual depth around lived experience.

The analogy can then be used to make it clear to students that work is required. To do qualitative research well takes careful thought, a rigorous analysis that withstands the scrutiny of an examiner and advanced learning. To produce rigorous findings, they still have to climb along and up the branch (learn) to see beyond the canopy of leaves (to see the research topic or field with as clear a view as possible). Students are encouraged to recognise that each ‘branch’ is as thick as the trunk on the pine tree that represents quantitative methods, and so be prepared to make a similar learning investment. In a postgraduate class we can extend the analogy, describing a branch close to the ground as being very good for exploring lived experience, such as phenomenology. Whereas a branch that extends up, giving us more of a top-down view (once we have learned/climbed and have a view beyond the canopy) can helpfully explain the epistemological strengths of discourse analysis. It is also useful to represent the complexity of qualitative methods with the overlapping and intersecting branches supporting students in their growing understanding that some qualitative methodologies, in fact, share particular characteristics (perhaps share a theoretical root, or work well with a particular method such as interviewing).

The analogy will take on more shape in an upcoming section on implications for structuring methods courses, where we consider the ramifications on what teachers ‘do’.

Teaching quantitative methods: The pine tree

Quantitative learning is different. The analogy here is of a pine tree (Figure 2). The climb (learning) is hard, there are no low hanging branches to lean upon, and few branches in the climb up to rest and catch one’s breath. You will need a harness to help, and, in this analogy, this includes learning how to use statistical software as well as learning how data collection and analysis works. New skills are vital then, even for someone who is maths confident. It is incremental, in that each stage must be climbed (understood) to access the next part of the tree, and the climb up can feel endless. Getting halfway up the tree can take a lot of effort, but still might not provide a clear view, and here students need to be encouraged that this is normal, and to trust that their efforts will be rewarded. They are unlikely ever to have got through half a disciplinary course during their time at university, where they have attended and engaged, and felt this lost. With this analogy, from the first lecture, we can reassure students that these feelings are normal, and that they will get to the top of the climb and be able to see for miles if they trust in the educator and the learning space we have crafted (we become another kind of ‘harness’).

Figure 2. The pine tree is difficult to climb and must be done in incremental stages. The climb though, does reveal clear views from the top. Image author’s own (Ferrie).

We also argue that like a pine tree, the branches at the top of the climb are relatively clustered, and while they may go in different directions from the trunk (frequentist versus Bayesian approaches?), they share a general ontological foundation. Thus, students who gain confidence in basic statistics will find it easier to learn advanced topics. The pine tree analogy also helps students see the limitations of a quantitative approach; you can see a long way, but you can’t see a lot of ground detail.

We use this analogy at the start of various undergraduate and postgraduate courses to explain why students should attend all lectures and practise their new skills, in a similar fashion to learning a new language. We tell them that they should rehearse learning, and we will use repetition in the lectures and in their lab workbooks. If this rehearsal of key concepts starts to feel boring, we say that’s because they’ve achieved that incremental learning stage and are ready to level up. We can also point out that they can’t miss out learning; this is analogous to jumping up the tree rather than climbing up, and this will increase their feelings of anxiety, so any missed classes need to be caught up. We tell them assessments require the whole course to be learned. Frustratingly, there are always a few students who in the course evaluations write ‘I wish I’d listened’. The analogy of the pine tree resonates for most though and prepares them to approach their learning environments differently to disciplinary classes. From the first year of using these images at the start of teaching, they have been specifically mentioned in student feedback as a strength of courses. What these analogies do well then, is help students adjust their learning approach. They do not help students become motivated: structural and individual barriers remain. Further, in their own way, they reinforce the divide between qualitative and quantitative methods. Not only do they communicate that a new/different learning approach is required relative to disciplinary learning, but also that each course needs its own approach. In the next section we consider implications for teachers before introducing the ‘dirty greenhouse’ analogy, which can help motivation as students can begin to imagine the ‘whole’ of research design, and how each learning ‘unit’ will help them develop. The analogy attempts to remove historical hierarchies, or the imposition of which knowledge forms have the most authority. The analogy presents knowledge production as a unified framework consistent with a mixed methods approach. We believe it is a helpful foundation even if the student then goes on to learn qualitative approaches in a separate class to quantitative.

Implications for structuring methods courses

To teach well, methods educators must also embed an appreciation of theory and disciplinary knowledge required for students to engage critically with methods learning (Kilburn et al., 2014). This is very tough to do: exacerbated by our institutions rewarding specialisms rather than generalism; and methods teaching often being taught across a number of social science subjects in the name of rationalism with consequently very large class sizes (Ferrie et al., 2022).

Learning environments must be ‘relational’ to disciplinary learning, rather than skill-focused (Skemp, 1976), and connectionist (Buckley et al., 2015) in that students actively and critically evaluate the value of their methods learning for its relevance to their degree. It is a big ask for educators to expect students to be able to build such theoretical bridges (between methods and disciplinary knowledge) themselves: they have not been asked to do this elsewhere in their degree. Nind and Lewthwaite (2018) captured this in finding that methods teachers need Pedagogic Content Knowledge: the combination of knowing how to teach, the methods skills that need to be learned and the disciplinary content that makes this learning feel relevant.

So: methods teachers hold a great deal of knowledge, but how do they start their teaching? If skill-focused, then they lack the connection to disciplinary relevance required to motivate students. If they use a research project as an exemplar, they will engage those with an interest, but struggle with those not interested in the topic. The next section outlines a teaching device that helps students get ‘a handle’ on what research methods are, and how they operate.

Three shifts in teaching research methods

The challenge described above necessitates three big shifts within our approach to methods teaching. First, our degree curriculums should evolve: methods need to be given due and explicit consideration from first year undergraduate. Any delay is interpreted by students as methods not being a priority area. First-year dedicated courses called ‘methods’ may not be needed: method weeks embedded within introductory curriculums would work well.

Second, methods assessments should look similar to what students are used to, for example, fully referenced critical arguments. There may be a departure from essays to a portfolio or report, though any departure from the ‘norm’ will be experienced by students as a disruption and increase their feelings of anxiety. To avoid feelings of vulnerability, educators could explain and justify how the assessment will add value, and how they will be assessed. For example, arguments that producing work in a report format will benefit their dissertation work and/or be attractive to employers should be explicitly stated.

Finally, fusion of quantitative and qualitative approaches will help students learn about how to produce robust and rigorous knowledge. What follows is further discussion of this recommendation using a final analogy.

The ‘dirty greenhouse’ pedagogic device

Greenhouses are used in UK gardens, as they are globally, to grow plants that need particular growing conditions. They work best when the panes of glass that form the construction are clean. In some UK gardens they are not. Their age and our wet weather and consequently muddy gardens generally mean that in fact, our greenhouses are dirty, or at best, a bit smudgy. In practice it means that the non-expert observer (a visitor to someone else’s garden for example), may get an impression of what is going on inside the greenhouse but not much more. To be able to count the number of tomato plants inside, or appreciate the range of plants growing, or understand how close tomatoes are to ripeness, the visitor requires clean glass. The concept of a dirty greenhouse is used as an aid to methods teaching.

Figure 3. A dirty greenhouse, with thanks to the Edson family. Images author’s own (Ferrie).

We have used this analogy in a number of learning spaces: undergraduate core quantitative methods; undergraduate students on the advanced quantitative methods learning track through the Glasgow Q-Step Centre (ESRC & Nuffield funded); postgraduate advanced qualitative methods students; and a range of training events run with the Scottish Graduate School of Social Sciences (Scotland’s ESRC funded Doctoral Training Partnership for PhD students). As an analogy it has worked enormously well to create a lightbulb moment for students, and specifically has helped students understand where/how their current methods learning ‘fits in’ to broader knowledge of what methods are and what they can do, even from an introductory learning stage. This has been equally useful to PhD students grappling with methodology chapters where their advanced/specialised method needs to be presented within a convincing narrative of strengths and limitations, and what they could have done differently.

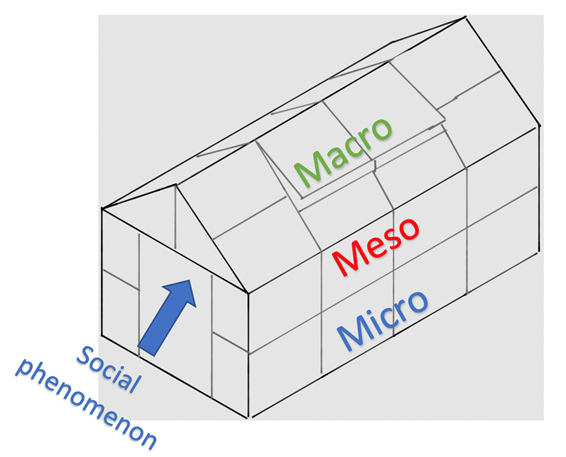

If we take this analogy and apply it to social research methods: growing inside our conceptual greenhouse is a social phenomenon (by which we mean a topic of research interest). It could be something local or grounded that has taken our interest, like the role of activism in an urban school to teach students about citizenship, or it could have a more structural focus, like the role of activism in global education programmes. Our greenhouse, to extend the analogy to students, is constructed around the social phenomenon and is dirty.

Students have read the books and articles that have told them about the social phenomenon, but to see for themselves, they must do research, that is, clean the greenhouse to examine the social phenomenon inside. They don’t have time in their dissertation to clean it all. The literature review they conduct gives them enough understanding of what others have ‘seen’ as they have looked through their ‘pane of glass’, and will help students defend which pane of glass they are going to clean to get their own view. The first image students are shown appears in Figure 4.

Figure 4. The dirty greenhouse analogy - a metaphorical scaffold presenting micro, meso and macro approaches to research. Image author’s own (Ferrie).

The starting point for educators is to help the students set up their metaphorical scaffold around their social phenomenon of interest. We ask them to consider their upcoming dissertation or thesis and what they would like to research. If they are interested in real people and their relationship with the phenomenon, then their research will have a grounded or micro-focus. If they are more interested in how a community interacts with the phenomenon, then their research will have a meso-focus. Finally, if they wish to have a view from above, to examine the experience of the phenomenon at the population level or examine social structures, then their research will focus on a macro-level. This is conceptual understanding and does not yet link to skill learning. It is foundational learning that helps students build confidence in what research is, and how they will approach decisions around what research they will do. At this point in their learning, they are asked to consider at which level the pane of glass they wish to look through is located.

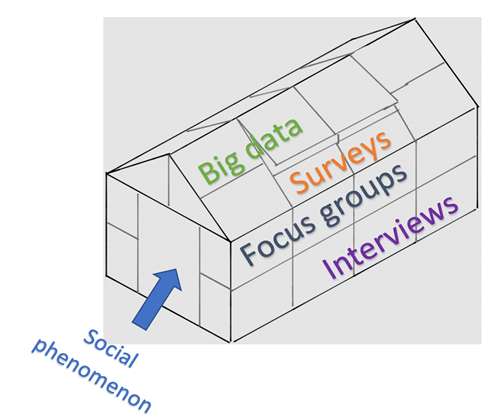

The next version of the dirty greenhouse (Figure 5) is designed to build their knowledge towards particular methods. Which pane of glass (method or research question) they choose will give them only one perspective on the phenomenon, and as they learn how to ‘do’ research methods, they will grow their understanding of both the strengths of what they can see from their chosen approach, but also the limitations. Right from the earliest stage of their learning then, students are encouraged to consider that no research project can be perfect; it will always offer a ‘partial view’.

We are able to move to a second type of learning point, about their role as researchers. Their job is not just to look through the pane of glass, but to clean it, and how well they clean it will speak to the credibility of the evidence they present. In quantitative methods, this may be understood as validity and reliability and these will be discussed later in the paper. In qualitative methods, this may be understood as transparency.

Figure 5. The dirty greenhouse analogy - a metaphorical scaffold presenting skills and methods. Image author’s own (Ferrie).

Each pane of glass becomes understood as a particular research question that the student must form as they approach their research practice. Their question and chosen method must be aligned, and the greenhouse analogy can help students visualise what this means. The image (Figure 5) works well to help students see why skill-learning, particularly around software, is a key part of their learning. They can see how it will fit into their research practice.

For undergraduate learners in particular, and anyone new to learning methods, using a scaffold like the greenhouse analogy reduces anxiety as it helps them conceptually understand that the course or their later dissertation/thesis will not require expert knowledge of how to do research using all the methods that they will be exposed to. With their dissertation in particular, examiners recognise that their learning opportunity is limited, though they are expected to demonstrate robust learning around one part of the puzzle, that is, the method they use in their research. Their exposure to other methods, methodologies and forms of analysis will help students appreciate the wider landscape of knowledge about their social phenomenon and how their research sits relationally to it.

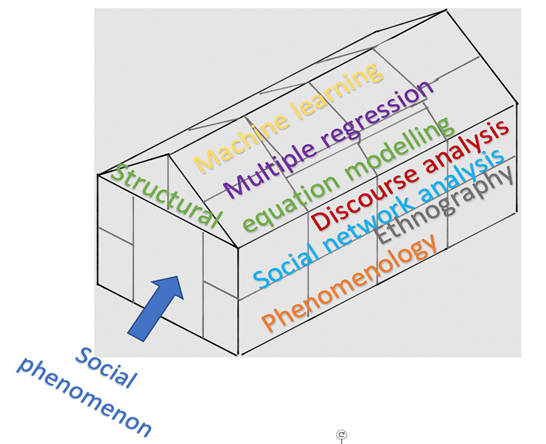

Figure 6. The dirty greenhouse analogy - a metaphorical scaffold for methodologies. Image author’s own (Ferrie).

The key strength of this analogy, though, is that, with very little prior knowledge, students can understand what methodologies are (Figure 6). For example, they can grasp the differences between phenomenological research (a pane towards the bottom of the greenhouse that allows scrutiny of grounded in-depth experiences); a critical discourse analysis approach (a middle pane that allows us to look down at experiences, though not with as much depth, and up to see some of the power structures at play, again with limited exposure to either); structural equation modelling (another middle pane that allows us to see multiple factors at play and how they work together to form a reality of the phenomenon); big data or epidemiology (undoubtedly a higher pane, possibly in the glass roof, that allows us to look at the phenomenon as a whole); and mixed methods (we get to open the door of our greenhouse and look in; though we still only see one facet of the phenomenon, we may be able to get a sense of how it exists as a whole, and how it is experienced by the few).

The image captured in Figure 6 is more challenging, particularly for new methods learners, because they have, generally speaking, very limited understanding of what the terms mean: it is a new vocabulary. They are also unlikely to learn these methodological approaches in undergraduate or post-graduate introductory classes. Nevertheless, it is important to expose students to these approaches at this stage because their wider reading, particularly when reading peer-reviewed journals for their disciplinary classes, will involve this terminology. Even a very basic understanding of what they ‘do’ in terms of learning about a social phenomenon is valuable. The image in Figure 6 is the first one that we believe can be contested and this is highlighted to students. For example, social network analysis tends to collect many data points from relatively few people and for this reason has been placed as the lowest of the approaches that use numeric data: but each individual research project must be assessed for what it does. Similarly, the relative positions of discourse analysis and ethnography may not hold true given two particular pieces of research. What the image captures is what is generally expected for each of these methodologies. Encouraging students to think critically about the research they are exposed to is a key learning point. In the first lecture then, students are prepared to accept that even within a method, or methodology, there is flexibility in how the research is produced, and in the knowledge that is subsequently gained (and therefore in where it may ‘sit’ on the greenhouse).

The greenhouse scaffold avoids binary or hierarchical thinking about qualitative and quantitative approaches (where one is seen as more important than the other, most famously in ‘the hierarchy of evidence’; Evans, 2003) because they can co-exist in the greenhouse.

The paper will now unpack each of these elements with careful consideration of how this can be taught, and how it helps students understand the value of the practical aspects of the learning space. Thus, the contribution of the paper becomes pedagogical in focus.

Teach students to clean each pane thoroughly

A major challenge of teaching students about methods at our institution sits with the courses being compulsory in the honours year of study. This is typical across many UK universities. In our institution, this is the first year where students have really had the power to curate their own curriculum. The freedom to complete any course from a wide range of disciplinary gems is wonderful for students and recognises them as sophisticated agents who have learned enough to be given this power. And from this gloriously exciting position, they are told they must do quantitative methods in semester 1. Some also have to sit qualitative methods in semester 2, but this rule is not universal across our school, adding to the rumbling disgruntlement from those that ‘have to’ do both.

Neither the undergraduate course (the large honours course of >200 students) nor the teaching for our Q-Step students (with typically >30 students) uses assignments that are focused on demonstrating numerical skill alone. Rather they all focus on research and data literacy. For the larger quantitative and qualitative courses, the first assignment is a critical review of a good research article. Each article (students choose one of five) is linked to a disciplinary area in the school with strong disciplinary learning elements. Thus, this assignment, as with the courses in general, links heavily to the learning environments structured in disciplinary courses across the school.

In preparation for this review, students are introduced to the greenhouse analogy and taught how to clean a quantitative pane of glass. That is, they are taught how to use ideas of replicability, validity and, most importantly, concepts of measurement as foundations for a robust research design that allows them to see their social phenomenon of interest clearly. They are taught about scaling and sampling, to help them see that they may only be able to view a small aspect of the phenomenon, and that they should not be tempted to claim their perspective is wider than it is. Rather, we reinforce the point that extracting robust learning about a small group is superior to flimsy or over-estimated claims about a larger group. In turn, this learning is placed within the context of their own dissertations and how they may use these concepts to demonstrate rigour and defend their work to an examiner.

With the assignment, they practice applying this knowledge to a critical evaluation of good, published work. Using a framework like the greenhouse to be critical helps students find the right tone and avoid arguing that the article’s authors were wrong or bad researchers, that the student knows better (though they may), or that the research evaluated is worthless. They can practice pointing out the limitations of the research, either in how well the pane of glass was cleaned, or whether the correct pane of glass (method/research question) was selected to see the social phenomenon. Since introducing the greenhouse analogy into teaching, students are much less likely to critique the researcher, and more likely to focus on aspects of the research design that could have been performed, or explained, better.

The use of the greenhouse analogy gives students confidence in understanding what a ‘good study’ looks like and, through writing the critical review, practice in convincing someone of what they know. It also helps them understand that their undergraduate dissertation cannot be perfect, that it will always have some limitations, just like the peer-reviewed published articles they have critically reviewed. The analogy has helped students both to invest in methods learning and to see how it may be applied within other parts of their degree.

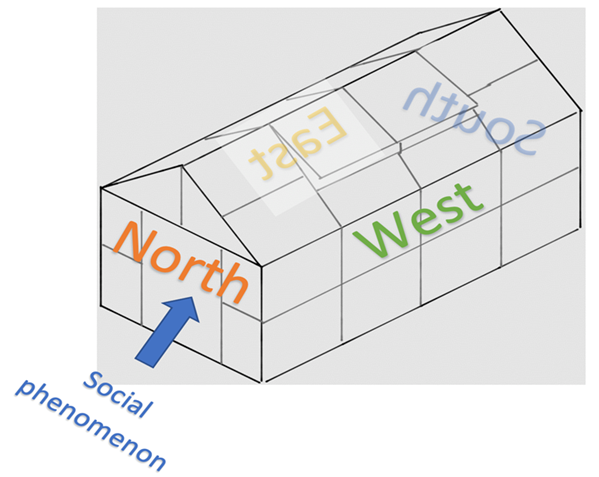

Figure 7. The dirty greenhouse - a metaphorical scaffold for understanding the role of colonisation in research. Image author’s own (Ferrie).

A final point here: as the analogy can encompass all methods/methodologies, it helps students in one class (say quantitative, as in our school this is delivered first) appreciate the value of their investment (in learning quantitative methods) not because they intend to apply quantitative skills in their dissertation, but because they will need to engage with a broad literature including quantitative evidence. The analogy is used in exactly the same way when teaching qualitative students. Even those with advanced methods skills value the framework that avoids the quantitative and qualitative binary because, as they mature as researchers, they come to understand how limiting binary thinking is.

Another image of the greenhouse (Figure 7) is used to introduce the concept of decolonisation with regard to research practice. Part of the debate around decolonising the university is about the northern and western dominance of ideas and the universalism that is still assumed. It is helpful for students to see that their research work, if dominated by northern/western literatures and located in northern/western geographies, ought not to assume relevance to people living in southern/eastern geographies. Again, they are presented with an argument that their research will also have limitations, and their assignments will help them practice identifying which limitations they have control over and which they do not. We have successfully used this image (Figure 7) to demonstrate how power exists in all research, as northern and western ideas dominate in how methods are taught, and to ask students to help us consider critically: what needs to change? In collaborating with the students on this question, we aim to diminish power imbalances within the classroom between teacher and learner, though inevitably this is only partially successful.

Evolving from learners to knowers

One of the challenges undergraduate students face in learning about methods is the transition from learners of things which they thought were facts, to producers of knowledge which they know to be contingent on multiple factors and at best, knowledge that offers a partial understanding of what is, or what is going on in society.

By demonstrating that all knowledge is partial in the social sciences, we are in fact threatening their foundational understanding of the university and the university degree as a gateway to knowledge. This is in part an issue with how schools teach, and the over-emphasis on regurgitation of what is taught, rather than free or critical thinking about what is known. It has been replicated across some methods and substantive methods programmes too, where large class sizes have challenged colleagues and assessments are designed to be manageable by teaching teams rather than led by pedagogical theory towards optimising learning. For example, some courses use assessments that generate scores (a point for including the crosstabs table, a point for using percentages rather than count data, for example). Reducing methods down to right or wrong answers removes the social sciences from their syllabus. Error is always present in our research, and our findings are always limited because we are always unable to capture the full messiness and complexity of a social phenomenon. Or we simplify and sterilise our data to the point that what we capture is such a small and isolated aspect of the phenomenon that it is difficult to see the merit of our endeavours. Rather, we advocate for marks to reward the strength of academic and critical argument presented.

How does the greenhouse analogy help here?

It still distresses many students to learn that most of their knowledge is evidential, not factual. That many students arrive at their undergraduate methods courses not understanding this shows that our pre-honours teaching could do better in moving students beyond the ‘regurgitate what you’ve been told’ model.

The greenhouse can be used as a framework. Thus, students can establish the quality, strengths, and limitations of the knowledge they are confronted with. Scary at first perhaps, but with practiced use, they can and should evaluate all the knowledge they are exposed to. This isn’t a methods skill, but a critical thinking skill, and allows students to gain a voice, that is evaluative of the work done by those that teach them. This is quite a leap of power we are giving students. Without a framework, some are unable to make it. In the worst cases, students may graduate with a lower class of degree, not because they struggled with complex intellectual knowledge, but because they struggled to find their voice to challenge and test complex ideas.

One problem students sometimes have when doing their own research, is gaining confidence that what they’ve done is the ‘best way’. From our perspective as methodologists, no way is the best way, though there are certainly ‘wrong ways’. Each pane of glass represents a different way and what we can teach is that as each pane of glass gives a limited perspective of the social phenomenon, then the trick is to get our research question right for the pane of glass/perspective. If we can’t shift our research question to really match with the pane of glass, then we should consider using a different pane (method).

Much can be gained by students from understanding the choices they have available to them in their career. Some of us become experts on looking through one pane of glass: those that become expert structural equation modellers; analysers of panel data; phenomenological interviewers; ethnographic insiders; or archivists. To sustain our careers and the seemingly constant requirement from us to produce new knowledge to feed the Research Excellence Framework (REF; in the UK, a pan-university framework that aims to map excellence), a scholar with a particular methodological expertise may flit across different substantive issues, and so become an expert not in the social phenomenon but in cleaning a pane of glass. An alternative, but less common, career path is to learn how to clean, and to practice cleaning, as many panes of glass as you can, to arrive at a much more complete understanding of the social phenomenon.

For post-graduate social science students, it is a lightbulb moment to position the literature review as functioning in this second space. The literature review is our curation of what is known of the substantive issue, of our social phenomenon of interest. What the greenhouse analogy gives us, that other forms of learning may not, is the significance of learning which pane of glass other researchers were looking through, and evaluating their work by asking, ‘did they clean it well enough?’ That is, rather than just describing the findings, and perpetuating ‘what is known’, the student is invited to ask, what do we think we know? And how well do we really know it? This critical element is the crux for us, in transforming a student into the owner of their research. Students learn how to answer questions themselves, rather than relying on someone else’s answers.

It is transformative too, in managing the anxiety that grows alongside a PhD thesis. There is a meme that appears on social media academic-themed feeds, that we leave our undergraduate degree knowing stuff, and we leave the masters knowing we don’t know a lot, and leave our PhD knowing nothing. Here we are giving students a framework by which they can freely see and admit to not knowing it all. They do know something new though, something from a unique perspective. Students now have the tool to focus on their contribution: their approach to cleaning the pane of glass and understanding what they have learned as they looked through it. If they can position their contribution within a whole-career-perspective, it frees them from the pressure they often feel of having to know it all with their first big piece of work.

Conclusion

This paper has aimed to uncover why methods courses have traditionally received poor student feedback and provoked anxiety. In turn, we have presented analogies that, in our experience, have helped students engage with the conceptual underpinning of research methods and, in so doing, have built confidence in how to approach the learning environment and with it, investment in the skill learning that goes alongside the lectures. Educators are practiced in teaching within the qualitative and quantitative silos, and many methods teachers feel competent in teaching one or the other. In turn, disciplinary teachers often situate themselves in either the qualitative or quantitative camp, and vocally diminish the value of the ‘other’. The greenhouse analogy, in particular, may help ‘the academy’ transition towards mixed methods, as students begin their learning having been introduced to an overall framework of method. Fusing quantitative and qualitative approaches should help challenge the hierarchy that exists where objective knowledge still carries more ‘authority’ than subjective (a notion that is well contested through the literature, if not always in the classroom).

What needs to happen next in the methods classroom is explicit engagement with power and with ethics. These analogies should prepare students for these debates as they become able to articulate with each methodology rather than the reductionist approach of engaging with quantitative versus qualitative historical debates. Thus, we (teachers and the community of future researchers we have taught) should be closer to considering how best to produce knowledge that acknowledges power. Further, researchers should be better able and more willing to defend the limitations of their work, appreciating that all research is a partial account of ‘the truth’.

Acknowledgements

Thank you to the reviewers Miriam Snellgrove and Ashley Rogers who certainly strengthened this paper. Thanks too to all the students who have provided feedback over the years.

References

Aubusson, P. (2002). Using metaphor to make sense and build theory in qualitative analysis. The Qualitative Report, 7(4). https://core.ac.uk/download/pdf/51087285.pdf

Büchele, S. (2021). Evaluating the link between attendance and performance in higher education: the role of classroom engagement dimensions. Assessment & Evaluation in Higher Education, 46(1), 135-150. https://doi.org/10.1080/02602938.2020.1754330

Buckley, J., Brown, M., Thomson, S., Olsen, W., & Carter, J. (2015). Embedding quantitative skills in the social science curriculum: Case studies from Manchester. International Journal of Social Research Methodology, 18(5), 495-510. https://doi.org/10.1080/13645579.2015.1062624

Carter, J. (2021). Work placements, internships & applied social research. Sage.

Coronel Llamas, J. M., & Boza, A. (2011). Teaching research methods for doctoral students in education: learning to enquire in the university. International Journal of Social Research Methodology, 14(1), 77-90. https://doi.org/10.1080/13645579.2010.492136

D'Andrea, V. (2006). Exploring methodological issues related to pedagogical inquiry in higher education. New Directions for Teaching and Learning, 107(3), 89-98. https://doi.org/10.1002/tl.248

Earley, M. A. (2014). A synthesis of the literature on research methods education. Teaching in Higher Education, 19, 242-253. https://doi.org/10.1080/13562517.2013.860105

Evans, D. (2003). Hierarchy of evidence: A framework for ranking evidence evaluating healthcare interventions. Journal of Clinical Nursing, 12, 77-84. https://doi.org/10.1046/j.1365-2702.2003.00662.x

Ferrie, J., Wain, M., Gallacher, S., Brown, E., Allinson, R., Kolarz, P., MacInnese, J., Sutinen, L., & Cimatti, R. (2022). Scoping the skills needs in the social sciences to support data driven research. Technopolis Group. https://www.ukri.org/wp-content/uploads/2022/10/ESRC-171022-ScopingTheSkillsNeedsInTheSocialSciencesToSupportDataDrivenResearch.pdf

Garner, M., Wagner, C., & Kawulich, B. (2009). Teaching research methods in the social sciences. Ashgate.

Hesse-Biber, S. (2015). The problems and prospects in the teaching of mixed methods research. International Journal of Social Research Methodology, 18(5), 463-477. https://doi.org/10.1080/13645579.2015.1062622

Hodgen, J., Pepper, D., & London, C. (2010). Is the UK an outlier? Nuffield Foundation. https://www.nuffieldfoundation.org/wp-content/uploads/2019/12/Is-the-UK-an-Outlier_Nuffield-Foundation_v_FINAL.pdf

Humphreys, M. (2006). Teaching qualitative research methods: I'm beginning to see the light. Qualitative Research in Organizations and Management, 1(3), 173-188. https://doi.org/10.1108/17465640610718770

Kilburn, D., Nind, M., & Wiles, R. (2014). Learning as researchers and teachers: The development of a pedagogical culture for social science research methods? British Journal of Educational Studies, 62(2), 191-207. https://doi.org/10.1080/00071005.2014.918576

MacInnes, J. (2014). Teaching quantitative methods. Enhancing Learning in the Social Sciences, 6(2), 1-5. https://doi.org/10.11120/elss.2014.00038

MacInnes, J. (2018). Effective teaching practice in Q-Step Centres. Nuffield Foundation. https://www.nuffieldfoundation.org/sites/default/files/files/Effective%20teaching%20practice%20in%20Q-Step%20Centres_FINAL.pdf

Mann, R., & Warr, D. (2017). Using metaphor and montage to analyse and synthesise diverse qualitative data: exploring the local worlds of 'early school leavers'. International Journal of Social Research Methodology, 20(6), 547-558. https://doi.org/10.1080/13645579.2016.1242316

Markham, W. (1991). Research methods in the introductory course: to be or not to be? Teaching Sociology, 19, 464-471. https://doi.org/10.2307/1317888

McVie, S., Coxon, A., Hawkins, P., Palmer, J., & Rice, R. (2008). ESRC/SFC Scoping study into quantitative methods capacity building in Scotland. Final Report. https://www.sccjr.ac.uk/project/scoping-study-into-quantitative-methods-capacity-building-in-scotland/

Morgan, D. L. (2007). Paradigms lost and pragmatism regained: Methodological implications of combining qualitative and quantitative methods. Journal of Mixed Methods Research, 1, 48-76. https://doi.org/10.1177/2345678906292462

Nind, M., & Lewthwaite, S. (2018). Methods that teach: Developing pedagogic research methods, developing pedagogy. International Journal of Research & Method in Education, 41(4), 398-410. https://doi.org/10.1080/1743727X.2018.1427057

Purdam, K. (2016). Task-based learning approaches for supporting the development of social science researchers' critical data skills. International Journal of Social Research Methodology, 19(2), 257-267. https://doi.org/10.1080/13645579.2015.1102453

Rosemberg, C., Allison, R., De Scalzi, M., Bryan, B., Farla, K., Dobson, C., Cimatti, R., Wain, M., & Javorka, Z. (2022). Evaluation of the Q-Step programme. Technopolis Group. https://www.technopolis-group.com/wp-content/uploads/2022/05/Q-Step-evaluation-report-May-2022.pdf

Ryan, M., Saunders, C., Rainsford, E., & Thompson, E. (2014). Improving research methods teaching and learning in politics and international relations. Politics, 34, 85-97. https://doi.org/10.1111/1467-9256.12020

Scott Jones, J., & Goldring, J. E. (2014). Skills in mathematics and statistics in sociology and tackling transition. Advance HE. https://www.advance-he.ac.uk/knowledge-hub/skills-mathematics-and-statistics-sociology-and-tackling-transition

Scott Jones, J., & Goldring, J. E. (2015). ‘I’m not a quants person’; Key strategies in building competence and confidence in staff who teach quantitative research methods. International Journal of Social Research Methodology, 18, 479-494. https://doi.org/10.1080/13645579.2015.1062623

Skemp, R. R. (1976). Relational understanding and instrumental understanding. Mathematics Teaching, 77, 20-26.

Wagner, C., Garner, M., & Kawulich, B. (2011). The state of the art of teaching research methods in the social sciences: Towards a pedagogical culture. Studies in Higher Education, 36, 75-88. https://doi.org/10.1080/03075070903452594

Wathan, J., Brown, M., & Williamson, L. (2011). Understanding and overcoming the barriers increasing secondary analysis in undergraduate dissertations. In J. Payne & M. Williams (Eds.), Teaching quantitative methods: Getting the basics right (pp. 121-141). Sage. https://doi.org/10.4135/9781446268384.n8

Williams, M., Collett, C., & Rice, R. (2004). Baseline study of quantitative methods in British sociology. https://www.britsoc.co.uk/files/CSAPBSABaselineStudyofQuantitativeMethodsinBritishSociologyMarch2004.pdf

Williams, M., Payne, G., Hodgkinson, L., & Poade, D. (2008). Does British sociology count? Sociology students’ attitudes toward quantitative methods. Sociology, 42, 1003-1021. https://doi.org/10.1177/0038038508094576